Akida examples

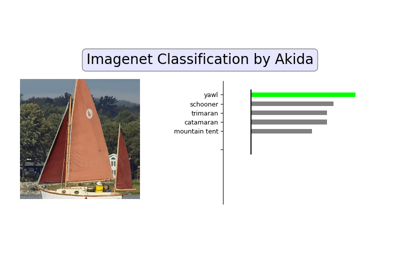

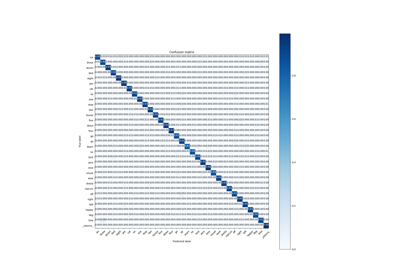

To learn how to use Akida and the QuantizeML and CNN2SNN toolkits, and to check the Akida accelerator's performance on some commonly used datasets, refer to the sections below.

General examples

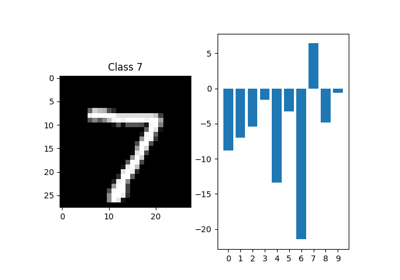

Quantization

Spatiotemporal examples

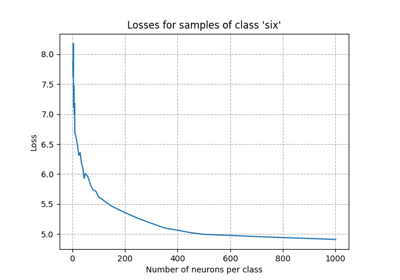

Efficient online eye tracking with a lightweight spatiotemporal network and event cameras
